Two teams of researchers at MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) have developed tools that allow soft robotic grippers to have better tactile sensing and proprioception—in other words, the robots can touch and feel, and they are "aware" of their own movements.

The researchers have published their findings in two papers, which they will present virtually at the 2020 International Conference on Robotics and Automation.

"Unlike many other soft tactile sensors, ours can be rapidly fabricated, retrofitted into grippers, and show sensitivity and reliability," MIT postdoctorate researcher Josie Hughes, the lead author on the first paper, said in a statement from MIT CSAIL. "We hope they provide a new method of soft sensing that can be applied to a wide range of different applications in manufacturing settings, like packing and lifting."

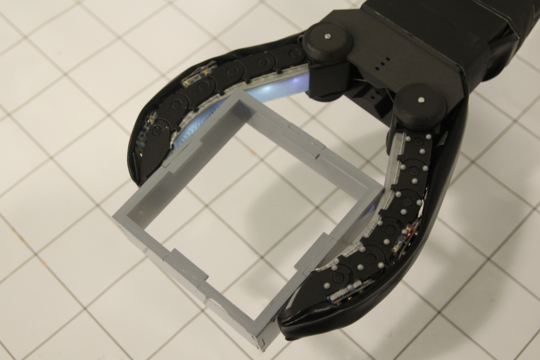

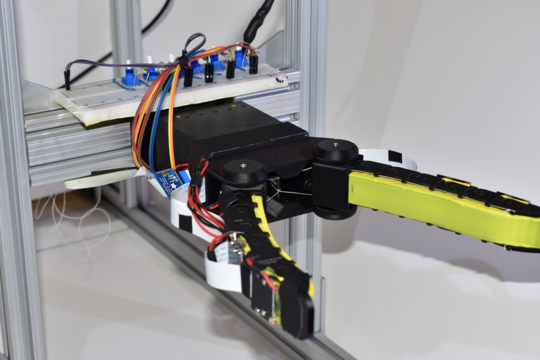

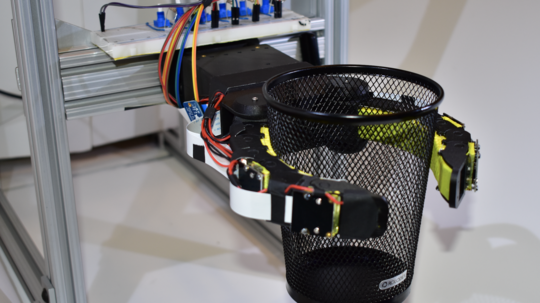

Hughes' team built on research from MIT and Harvard last year, in which a team developed a soft robotic gripper that collapses in on objects to pick them up. The researchers added a new invention: tactile sensors, made from latex balloons connected to pressure transducers, which let the grippers pick up and classify objects.

The sensors correctly identified ten objects with over 90 percent accuracy, even when an object slipped out of the gripper's grasp, according to the statement.

Hughes' team hopes to make the methodology scalable and eventually use the new sensors to create a fluidic sensing skin.

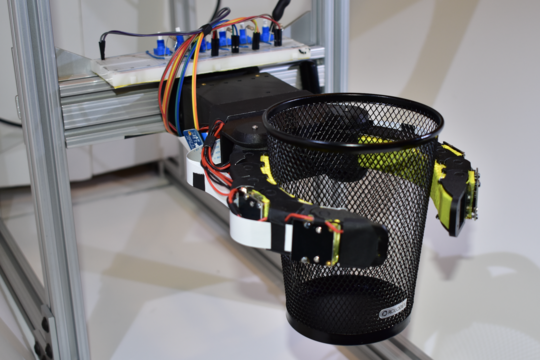

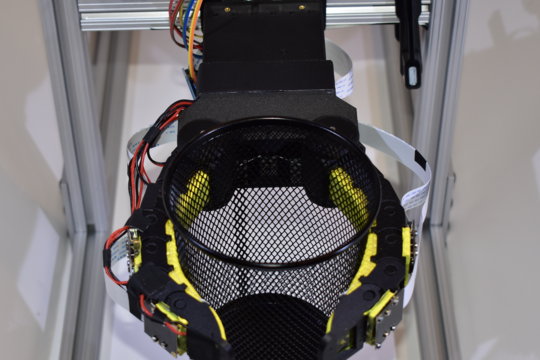

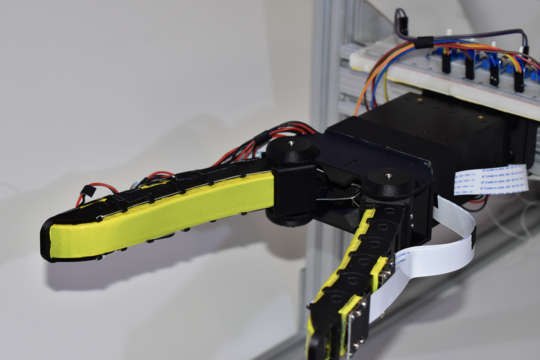

A second team led by Yu She created a soft robotic finger called "GelFlex." Equipped with embedded cameras and deep learning technology, the robot uses a tendon-driven mechanism to move its fingers. The system is aware of its own body positions and movements—a phenomenon called proprioception—and when tested on various metal objects, it could accurately recognize more than 96 percent of them.

Next, the team plans to improve GelFlex's algorithms and use vision-based sensors to estimate more complex finger configurations.

"By constraining soft fingers with a flexible exoskeleton, and performing high resolution sensing with embedded cameras, we open up a large range of capabilities for soft manipulators," She said in the statement.
