LinkedIn founder Reid Hoffman is pushing back on the "existential" and "theoretical" dangers that other industry leaders are highlighting about AI. Chief among them: That it could one day eradicate humanity.

There are more near-term, tangible threats that we should be talking about and addressing, such as bad human actors, Hoffman said Thursday at a conference hosted by Bloomberg Technology in San Francisco.

"One of the things that I think is dangerous about the existential discussion is it blinds us from some of the nearer things … AI is amplification intelligence," Hoffman said. "It amplifies human beings. And so we see a bunch of good things in human beings. AI tutors, AI doctors, a bunch of other things. There's also bad human beings, and so, what to do about bad human beings doing (things) with AI is also part of the portfolio mix of how you shape this technology."

Hoffman was an early investor in OpenAI and in March stepped down from the organization's board to prevent conflicts of interest and focus on another startup, Inflection AI, which he co-founded in 2022 with former Google DeepMind researcher Mustafa Suleyman and Karén Simonyan. Hoffman is also a partner at the venture capital firm Greylock Partners.

Inflection AI is developing what it describes as an AI personal assistant called Pi, which it launched in May. The Palo Alto company is one of the top-funded AI startups in the Bay Area. Inflection AI has raised at least $265 million and is reportedly seeking $675 million in fresh funding.

Others in the tech industry have been sounding apocalyptic alarm bells about generative AI since it exploded into the global zeitgeist over the past year.

Hoffman isn't the only one pushing back on this rhetoric.

"We heard a lot of talk this morning about the threats to humanity, and obviously human extinction, it's kind of an important, big deal, I get it. But along the way, we shouldn't only think about human extinction. We should think about mapping basic ethics and norms onto this fantastically creative and powerful tool," Hilary Krane, the chief legal officer for Creative Artists Agency, said during a separate panel about art and AI at the Bloomberg Technology conference on Thursday.

Television and film writers are already asking for protections from the use of AI in Hollywood, and have voiced concerns during union contract negotiations about how the technology could be used to replace human screenwriters.

OpenAI sparked widespread curiosity and fear about artificial intelligence after it released its image and text generators last year, namely DALL-E 2 and ChatGPT.

OpenAI co-founder and CEO Sam Altman has been among those warning about AI's threat to humanity, and on Thursday, Altman said that no single company or person, not even himself, should be trusted with getting AI right.

"No one person should be trusted here. I don't have super voting shares. I don't want them. The board could fire me. I think that's important," Altman said on Thursday. "I think the board over time needs to get democratized to all of humanity. There's many ways that could be implemented. But the reason for our structure and the reason it's so weird, and one of the consequences of that weirdness is me having no equity, is we think this technology — the benefits, the access to it, the governance of it — belongs to humanity as a whole … You should not trust one company, and certainly not one person."

Altman has also been meeting with government leaders around the world to talk about these theoretical threats and how he thinks governments should regulate the technology.

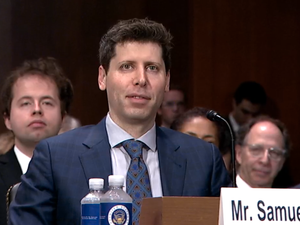

Altman testified at a congressional hearing in May, telling a Senate committee that AI should be regulated to mitigate potential harms.

"If this technology goes wrong, it can go quite wrong. And we want to be vocal about that," Altman told Congress, and "we want to work with the government to prevent that from happening."

OpenAI also reportedly lobbied for a watered-down version of the European Union's AI Act last year, according to Time Magazine, and argued to E.U. regulators that OpenAI's general-purpose AI software shouldn't be classified as a "high risk" system.

The non-profit organization Future of Life Institute published an open letter in March calling for a six-month pause on developing advanced AI systems. Among its now-32,000 co-signers is Elon Musk, who has since also announced his intention to launch his own ChatGPT rival called "TruthGPT."

Musk co-founded OpenAI with Altman and others but left in 2018 amid a power struggle and disagreements about how the organization should be run. Both Musk and Altman were named among Time Magazine's "Most Influential" people of 2023.