Ampere Computing is promoting a more powerful version of its data center processor and an AI collaboration with Qualcomm Technologies in an annual report released on Thursday.

In the report, Ampere returns to a theme it pressed hard a year ago, when awareness began to grow that ubiquitous AI, for all its possible benefits, could bring overwhelming energy demand.

"The current path is unsustainable," CEO Renee James said in a written statement that accompanied a video from Ampere.

A Goldman Sachs report this week estimated that data center power demand could more than double by the end of the decade, rising from 1-2% of global power consumption to 3-4%.

Ampere is based in Silicon Valley, but it has a 250-person presence in Portland's Pearl District. James, a former Intel president, founded the company in 2018 to make powerful server CPUs that aren't power hogs.

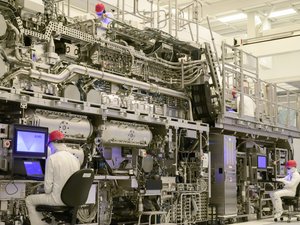

Last year Ampere introduced the AmpereOne flagship processor family. On Thursday it announced an upcoming souped-up AmpereOne chip with 12 memory channels and 256 cores, up from 8 channels and 192 cores. The new chip will be made on Taiwan Semiconductor Manufacturing Co.'s 3-nanometer process node; the earlier chip was made on the 5-nanometer node. Ampere claimed the chip would deliver "40% more performance than any CPU in the market today."

"We started down this path six years ago because it is clear it is the right path," James said. "Low power used to be synonymous with low performance. Ampere has proven that isn't true. We have pioneered the efficiency frontier of computing and delivered performance beyond legacy CPUs in an efficient computing envelope."

Ampere also said it's working with Qualcomm Technologies "to scale out a joint solution featuring Ampere CPUs and Qualcomm Cloud AI100 Ultra." The Cloud AI100 Ultra belongs to Qualcomm's portfolio of cloud AI inference cards. Inference is when a trained machine learning model is put to work producing output, as when ChatGPT answers a query.

"This solution will tackle large-language model inferencing on the industry’s largest generative AI models," Ampere said.

No timetable for the collaboration was announced.