Have you ever traveled to a country where you didn’t know the language? Did you try to order a meal? Go to the grocery store? Communicate with airport security or a doctor? In those situations, was it easier or harder to communicate? Did it take more effort, or a lot more time?

Chances are, if you've been in this situation, you know that trying to communicate in a language that isn't your own can be frustrating, exhausting and potentially dangerous. But at the end of your trip, you can go home to a place where people speak your language.

For people who are Deaf and use American Sign Language as their first language, this is a daily struggle no matter where they go.

"They’re like the traveler in a country where they don’t use the language on a daily basis in their home town," Dr. Rosalee Wolfe, a professor in the school of computing at DePaul University, explained.

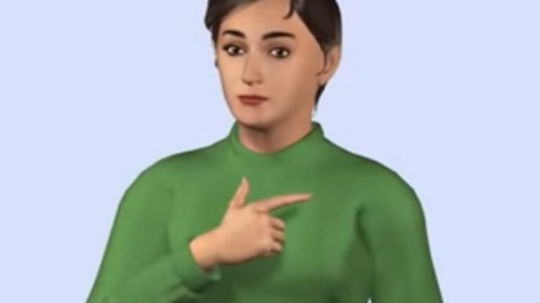

Wolfe and her team are seeking to bridge this deaf-hearing gap through the American Sign Language Avatar Project, technology that acts as a sort of Google Translate for the Deaf: People speak into the program and an animated avatar signs the translation in American Sign Language.

The project, which has been in the works since 1998, has to combine high-level, hyper-precise animation (any incorrect movement could entirely change the meaning of a sign) with speech and language recognition technology, all areas that have seen rapid development and changes over the last decade. Wolfe recently presented the technology at the Grace Hopper Celebration of Women in Computing.

In this interview on Chicago Inno's podcast, Wolfe discusses building the technology, how to prevent "fuzzy" translations between spoken English and movements in American Sign Language, and how tech companies are beginning to build inclusivity into tech from the beginning.

(Transcription and demo below)

Chicago Inno: Tell us about the American Sign Language Avatar Project.

Dr. Rosalee Wolfe: [Deaf] people don’t use English as their preferred language. They use American Sign Language. This is the language of the Deaf. Being able to communicate is such a basic, fundamental need. When I say Deaf here, I’m saying Deaf with a capital D. I’m not talking about, say, your grandparents who may have gone to too many rock concerts in their youth. I’m talking about people who were born deaf.

What we’re doing is developing noninvasive technology to bridge that deaf-hearing communication gap. We have a voice-activated avatar: you can speak various words and phrases, and it produces the American Sign Language. A person who is deaf can read what the avatar is signing in the animation, and that promotes better communication between the two communities.

So, for example, if I am not deaf and you are Deaf, I could speak into this program, which runs on a desktop computer, and an animated avatar would sign it back.

Exactly. What’s wonderful about today’s technology is that the physical hardware you need for this is now quite reasonably priced. Ultimately we’d like to have this on all portable devices, so it’s simply an add-on to the cell phone you already have.

Basically this is like a Google Translate for the Deaf, between American Sign Language and spoken English. How different is American Sign Language from spoken English?

They are very different. American Sign Language is its own independent language, with a unique syntax and grammar like any spoken language. There are parts of speech in American Sign Language that we as speakers of English haven’t even imagined. For example, you can sign a statement such as “You’re going to the store.” If you add some movements of your head and eyebrows, you can ask “Are you going to the store?” using exactly the same signs on your hands. The attitude of the body, meaning how you position your spine, how you position your head, how you move your eyes and how you move your face, all contributes to the language. Just like in spoken or written English, there are rules for how to use your body to create this wonderfully complex and expressive language.

So what we create with our voices in terms of tone and expression is done in three dimensions.

Yes, that’s done in three dimensions. Also, the word order we use in English doesn’t necessarily correspond to sign order in sign language.

I think about how when we use Google Translate, if I do English to French it will still use words. I type English words into one side and French words come out the other side. But when you’re translating to American Sign Language, words aren’t enough.

When you’re going from English to French, you’re going from one set of written words to another set of written words. Here, you’re going from English, which is words you can type, into a visual modality: gesture. So yes, you need computer animation in the form of an avatar to produce signing that is legible.

Right now you have a desktop version of this program people can interact with. Where are people using it and how are people using it?

We’ve found a lot of use in helping hearing people learn how to sign effectively. We have copies of our avatar in all of the state-supported developmental residential centers in Illinois. At the Jack Mabley Developmental Center in Dixon, Illinois, over 95 percent of the caregivers can now communicate with residents using sign language. That’s really amazing, because they’re hired for their caregiving capabilities, not for knowing sign language. They have to learn on the job, and the avatar gives them the chance to practice.

So they can speak into the program, and it can sign it back to them and they can learn in that way.

Also, the avatar can sign something and ask, “OK, what did I just sign?” so you can learn to recognize sign language that way.

So it’s also a bit of a Rosetta Stone for sign language.

That would be a great goal.

So tell us about your long-term vision for this. You mentioned a mobile app in the future. Is that something you’re actively working towards?

Yes, we are. Along with my co-leader, Dr. John McDonald, we’re working toward having this app available universally. It would be something you could download from the App Store and have on your phone. Even if the other person doesn’t have the app, say a hearing hotel clerk and a Deaf guest who’s staying there that night, the Deaf person could hold out their phone and say, “Speak into this.”

The idea is we could make this easy to download, available at a free or a very nominal cost, so that everyone will download and use it.

How far away are we from something like that happening?

We’re still a little ways away, but we’re working toward it. I actually just had a productive conversation about bringing the technology onto the Android platform.

So the project originally started in 1998.

That’s correct.

Tell us about some of the challenges to creating tech like this. What are some of the obstacles that got in the way, or some of the things that are trickier about creating this very dynamic technology?

A Deaf student came to me and said, "Speech recognition has gotten better. We have video. We could hook up speech recognition to video." I didn’t know anything about sign language and I started asking, "Do things in sign language change form depending on how they’re used, like verbs in English?" We talked about 'I am' and 'You are'. She said "Yes it does," and I said "We should be using computer animation for this. We should be using avatar technology. There’s so much in avatar technology that exists, there’s commercial programs. We should just be able to take it off the shelf and it should work."

[Then] everything broke. It turns out the demands for realism and flexibility are much, much higher for sign language. Animated movies are lovely and beautifully expressive. But what Snow White did in 1937, she is still doing today. She hasn’t changed a thing. It’s always the same. Gaming avatars have different moves, and you can control the various things the avatar does, but it’s coming from a limited repertoire. It’s not like speech, which is a living thing that changes every day. We needed to create an avatar that can produce very realistic utterances that are easy for the Deaf community to read.

When you’re in a stressful situation, that isn’t the time you want to spend your mental energy on “What is that avatar saying?” It’s like being a hearing person in a transportation center of some type when an announcement comes over the PA system. You know you have to change gates, then you hear the PA announcement and it’s fuzzy. That’s very stressful. We want the avatar to sign legibly so that we don’t recreate that bad PA experience.

You don’t want movement mumbling. For example, say that as part of a sign you had to touch your hip and then touch your eye, two very distinct movements. If you don’t make those clearly, the sign is hard to understand.

That’s right. Another thing is that depending on which sign comes before and which sign comes after, the sign in the middle will change. Say I have a sign on the forehead, such as father, where your thumb touches your forehead, and then you talk about children afterward, and children is down lower, about waist high. When you’re signing quickly, you won’t always make it all the way up to your forehead or all the way down to your waist. We need to be aware of those kinds of subtle changes in production. All of that adds to the legibility.

I think it’s interesting that a deaf student was the one who made this connection. Is there a community of deaf technologists working on these accessibility issues?

We are so grateful to the Deaf community here in Chicago. They have been so generous with their time and expertise, and we’re continually reaching out to them for advice and feedback on what we’re doing. What we’re doing makes no sense unless it’s useful for our clients, which is the Deaf community.

Talk a bit about the size of this community. As we say in the startup world, what’s the market size? Who could be impacted by this tech if it rolls out?

According to the most recent research published by Gallaudet University, the liberal arts university for the Deaf in the United States, over half a million people use American Sign Language as their first or preferred language. So yes, there’s a market out there.

What’s the state of accessibility technology?

A recent development that’s very encouraging is that more and more companies are including these specialized stakeholders at the beginning of the design process. Instead of saying, “Whoops, we’re just about to roll out, oh yeah, we need to include some accessibility feature,” and having that retrofitted at the end, more and more tech companies are considering accessibility at the front of the design process, before any implementation begins. We’re seeing this at companies such as IBM, where one of our DePaul graduates, Brent Shiver, is working as an advisory engineer at IBM Research on these exact issues for the Deaf community.

So you’re seeing companies make sure they have people at the beginning saying, “We need to be thinking about these communities if we’re going to roll out inclusive tech”?

And they’re reaching out to the communities, which is wonderful. So they’re actually asking their intended audience, “What do you think? What’s useful for you?” And that’s a wonderful development.

Are there any challenges that people are going through right now that might hinder this innovation moving forward?

There’s always the question of what exactly the tech is useful for, say immediately, in the next five years, in the next 30 years. Where this tech will be immediately useful is in short interactions where the dialogue is highly predictable. We’ve actually built a prototype that we demonstrated to the Transportation Security Administration, showing how a voice-driven avatar could help with the airport screening process. We were inspired to do that because one of our Deaf students nearly got arrested because of a communication problem between herself and a security agent.

Think about those everyday activities, the ones we carry out maybe five or ten times a day without even thinking about it, and what it’s like to do the same thing in a language you don’t know. That’s where this would have immediate benefit. A completely automated system is way off in the future. Certified interpreters of ASL have nothing to worry about from us.

So your robot is not taking any jobs.

What we’re doing is providing accessibility where there never has been [accessibility]. At present, given the resources, there is no way to cover something that short: you can’t hire an interpreter for a three- to four-minute conversation.

Your background is in Human-Computer Interaction, but it didn’t start off in accessibility. What has it been like to move into this territory, and what impact has it had on your view of technology?

It’s such an enabling device. When I was working in HCI and user experience in general, my mind was focused on the hearing world. But I’ve learned so much from my Deaf students, from Deaf colleagues, from Deaf teachers. When I went back to school, my students were utterly delighted to see their teacher sweating it out, having to take a midterm [in sign language]. It’s amazing, the different creative ways people can use tech. I’m always eagerly anticipating what will be around the next corner.